Following the "Drawing images in Camera Mode" documentation for Zoom Apps, I am trying to draw an image while in camera mode.

Steps to reproduce:

1. Start the rendering context in camera mode:

```ts
await zoomSdk.runRenderingContext({
  view: "camera",
});
```

2. Attempt to draw the image, e.g.:

```ts
const canvas = document.getElementById("canvas") as HTMLCanvasElement;
const ctx: CanvasRenderingContext2D = canvas.getContext("2d") as CanvasRenderingContext2D;

const img = new Image();

function draw(img: HTMLImageElement) {
  canvas.width = 1280;
  canvas.height = 720;

  // Draw the loaded image onto the canvas at its natural size
  ctx.drawImage(img, 0, 0);

  // Read the pixels back as RGBA; getImageData throws a SecurityError
  // if a cross-origin image has tainted the canvas
  const imageData: ImageData = ctx.getImageData(0, 0, canvas.width, canvas.height);

  // Hand the pixel data to the Zoom Apps SDK for rendering in camera mode
  zoomSdk
    .drawImage({
      imageData,
      x: 0,
      y: 0,
      zIndex: 10,
    })
    .then((response) => {
      console.log("drawImage returned", response);
    })
    .catch((e) => {
      console.log(e);
    });
}

// Only draw once the image has finished loading
img.onload = () => {
  draw(img);
};

img.src =
  "https://9bae041b1da7.jp.ngrok.io/assets/1@2x.jpg?0.0.14?w=164&h=164&fit=crop&auto=format";
```
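When reproducing this, it can help to fold the two steps into one ordered flow. That way `drawImage` is only issued after `runRenderingContext` has resolved, and any rejection reaches the caller instead of being logged and dropped. The following is a minimal sketch under stated assumptions. `CameraDrawingSdk`, `ImageDataLike`, `assertRgbaBuffer`, and `startCameraAndDraw` are hypothetical names. The interface is a hand-written subset that only mirrors the option names used in the post (`view`, `imageData`, `x`, `y`, `zIndex`) and is not the official Zoom Apps SDK typing. The sketch only checks call order and buffer size, so it is not a fix for the error itself.

```typescript
// Hypothetical structural stand-in for the browser's ImageData.
interface ImageDataLike {
  width: number;
  height: number;
  data: Uint8ClampedArray;
}

// Hypothetical subset of the two SDK calls used in the post.
// Assumption: option names mirror the post; this is NOT the official type.
interface CameraDrawingSdk {
  runRenderingContext(options: { view: "camera" }): Promise<unknown>;
  drawImage(options: {
    imageData: ImageDataLike;
    x: number;
    y: number;
    zIndex: number;
  }): Promise<unknown>;
}

// ImageData holds 4 bytes (RGBA) per pixel, so a well-formed buffer
// has exactly width * height * 4 entries.
function assertRgbaBuffer(image: ImageDataLike): void {
  const expected = image.width * image.height * 4;
  if (image.data.length !== expected) {
    throw new Error(
      `ImageData buffer has ${image.data.length} bytes, expected ${expected}`,
    );
  }
}

// Hypothetical helper: validates the pixels, waits for camera mode to
// start, then draws. Errors propagate to the caller as a rejected promise.
async function startCameraAndDraw(
  sdk: CameraDrawingSdk,
  imageData: ImageDataLike,
): Promise<unknown> {
  assertRgbaBuffer(imageData);
  await sdk.runRenderingContext({ view: "camera" });
  return sdk.drawImage({ imageData, x: 0, y: 0, zIndex: 10 });
}
```

In the browser, the object returned by `ctx.getImageData(...)` in the snippet above already has this shape, so it could be passed as `imageData` together with the real `zoomSdk` object, assuming its types line up with this subset.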

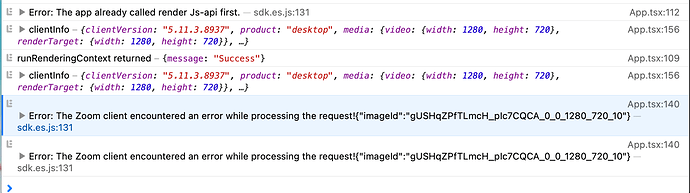

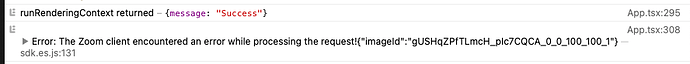

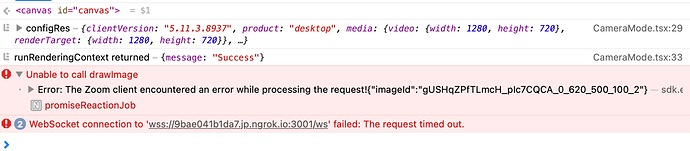

Running these steps results in the following console error:

Is something missing from the documentation, or is there a bug with this feature?

Thanks!