Description

Hey everyone, we run an interview platform. The online status of both users in an interview is synced to Firebase. Sometimes a user’s network drops and they are marked as offline. When this happens, we unmount the component that handles Zoom, gracefully disconnect, and destroy the client. Once they are marked as online again, everything gets reinitialised. We also use Zoom’s live transcription feature.

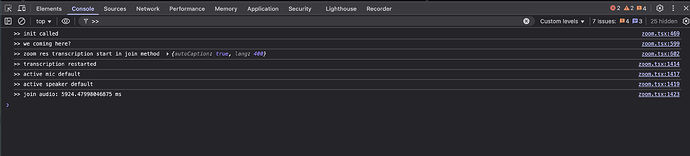

This is where we are facing issues. On the first run, transcription starts correctly and we receive the live transcription event from Zoom (`caption-message`). After a reconnect, however, we stop receiving it. To rule out the audio being muted, we also explicitly unmute audio, but still no luck. Below are logs from the first run and from the run after the disconnect.
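One thing that might be worth checking here is whether the `caption-message` handler is attached again to the client instance created after the reconnect. The sketch below is only an illustration, not a confirmed diagnosis: `bindCaptionListener` and `FakeCaptionClient` are hypothetical names, the fake client is an in-memory stand-in so the example runs without the SDK, and the only assumptions about the real client are that it exposes `on`/`off` and that the caption payload carries a `text` field.

```typescript
// Minimal shape of the event API used in this sketch.
type CaptionHandler = (payload: { text: string }) => void;

interface CaptionEventSource {
  on(event: "caption-message", handler: CaptionHandler): void;
  off(event: "caption-message", handler: CaptionHandler): void;
}

// Hypothetical helper: attach the handler to one client instance and
// return a function that detaches it from that same instance.
function bindCaptionListener(
  client: CaptionEventSource,
  handler: CaptionHandler,
): () => void {
  client.on("caption-message", handler);
  return () => client.off("caption-message", handler);
}

// In-memory stand-in for a client, only here so the sketch is runnable.
class FakeCaptionClient implements CaptionEventSource {
  private handlers = new Set<CaptionHandler>();

  on(_event: "caption-message", handler: CaptionHandler): void {
    this.handlers.add(handler);
  }

  off(_event: "caption-message", handler: CaptionHandler): void {
    this.handlers.delete(handler);
  }

  emit(text: string): void {
    this.handlers.forEach((handler) => handler({ text }));
  }
}

const received: string[] = [];
const onCaption: CaptionHandler = (payload) => {
  received.push(payload.text);
};

// First run: bind, then captions arrive.
const firstClient = new FakeCaptionClient();
let unbind = bindCaptionListener(firstClient, onCaption);
firstClient.emit("first run");

// Teardown: detach from the old instance.
unbind();

// Reconnect: a new instance has no listeners until they are bound again.
const secondClient = new FakeCaptionClient();
secondClient.emit("lost"); // nobody is listening yet
unbind = bindCaptionListener(secondClient, onCaption);
secondClient.emit("after rebind");

console.log(received); // [ 'first run', 'after rebind' ]
```

Returning the unbind function from the helper keeps attach and detach paired per instance, which makes it harder to leave a handler on a client that has already been destroyed.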

Which Web Video SDK version?

2.2.0

Code snippets

This is what I do after joining the session:

    await this.zmClient.join(slug, signature, zoomScreenName);

    if (isCandidate && isTranscriptionEnabled) {
      console.log('>> we coming here?');
      this.transcriptionClient = this.zmClient.getLiveTranscriptionClient();
      const res = await this.transcriptionClient.startLiveTranscription();
      console.log('>> zoom res transcription start in join method', res);
    }

This is how I am joining audio:

    this.setState({ isAudioCallRequested: false });
    const { t, ongoingAudioCall, isCandidate } = this.props;
    const { isAudioJoined } = this.state;
    const { mediaStream, micDeviceId, speakerDeviceId } = this.state;
    const audioOptions = { ...this.audioOptions } as const;
    if (micDeviceId) audioOptions.microphoneId = micDeviceId;
    if (speakerDeviceId) audioOptions.speakerId = speakerDeviceId;

    try {
      if (ongoingAudioCall && isAudioJoined) return;
      await mediaStream.startAudio(audioOptions);
      await mediaStream.unmuteAudio();

      if (isCandidate) {
        this.transcriptionClient = this.zmClient.getLiveTranscriptionClient();
        const res = await this.transcriptionClient.startLiveTranscription();
        console.log('>> transcription restarted', res);
      }

      const activeMic = mediaStream.getActiveMicrophone();
      console.log('>> active mic', activeMic);
      const activeSpeaker = mediaStream.getActiveSpeaker();
      console.log('>> active speaker', activeSpeaker);

      track(DIAGNOSTIC_EVENTS.ZOOM_CONCALL_AUDIO_STARTED);
      this.setState({ isAudioJoined: true });
    } catch (e) {
      console.error(t('error_starting_audio'), e);

      if (e?.reason === ZOOM_ERRORS.USER_FORBIDDEN_MICROPHONE) {
        this.setState({
          error: { ...e, permissionError: true },
        });
      } else {
        // show non-permission error only
        const reason = e?.reason ? e.reason : t('rejoin_call');
        console.log('>> error starting audio', e);
        renderToast(`${t('error_starting_audio')}: ${reason}`);
      }

      track(DIAGNOSTIC_EVENTS.ZOOM_CONCALL_AUDIO_START_ERROR, {
        reason: e?.reason,
      });
    }
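Both snippets above call `startLiveTranscription()`, once after joining the session and once after starting audio. Whether a second call on the same client is harmful is not something this post establishes, but if it helps to narrow things down, a minimal sketch of starting transcription at most once per client instance could look like the following. `ensureTranscriptionStarted` and `FakeTranscriptionHost` are hypothetical names, and the fake is a stand-in so the example runs without the SDK; only `getLiveTranscriptionClient()` and `startLiveTranscription()` come from the code in this post.

```typescript
// Minimal shapes for the two calls used in the snippets above.
interface TranscriptionClient {
  startLiveTranscription(): Promise<unknown>;
}

interface TranscriptionHost {
  getLiveTranscriptionClient(): TranscriptionClient;
}

// One pending or completed start per client instance. A WeakMap lets
// entries disappear once a destroyed client is garbage collected.
const startedByClient = new WeakMap<TranscriptionHost, Promise<unknown>>();

// Hypothetical helper: start transcription once per client instance and
// hand back the same promise to every later caller.
function ensureTranscriptionStarted(client: TranscriptionHost): Promise<unknown> {
  let pending = startedByClient.get(client);
  if (!pending) {
    pending = client.getLiveTranscriptionClient().startLiveTranscription();
    startedByClient.set(client, pending);
    // Forget a failed attempt so a later call can retry.
    void pending.catch(() => startedByClient.delete(client));
  }
  return pending;
}

// In-memory stand-in that only counts how often start was requested.
class FakeTranscriptionHost implements TranscriptionHost {
  startCalls = 0;

  getLiveTranscriptionClient(): TranscriptionClient {
    return {
      startLiveTranscription: async () => {
        this.startCalls += 1;
        return "started";
      },
    };
  }
}

const clientBeforeDrop = new FakeTranscriptionHost();
void ensureTranscriptionStarted(clientBeforeDrop); // after join
void ensureTranscriptionStarted(clientBeforeDrop); // after startAudio, reuses the first attempt

// A fresh instance after the reconnect gets its own start call.
const clientAfterReconnect = new FakeTranscriptionHost();
void ensureTranscriptionStarted(clientAfterReconnect);

console.log(clientBeforeDrop.startCalls, clientAfterReconnect.startCalls); // 1 1
```

Keying the bookkeeping on the client instance, rather than on a boolean flag in component state, means a newly created client after a reconnect is never mistaken for one that has already started transcription.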

This is what happens on disconnect:

    await this.zmClient.leave();
    ZoomVideo.destroyClient();

**Screenshots**

First run

Second run

I can see that the microphones are in use, but I am not getting the live transcription events. I’m unsure what’s going wrong with the implementation.

Device (please complete the following information):

- Device: MacBook Pro M1

- OS: macOS Tahoe 26.0.1 (25A362)

- Browser: Chrome

- Browser Version: 141.0.7390.123 (Official Build) (arm64)